RehabPal: Virtual Rehabilitation and Balance Trainer using MediaPipe

Introduction

Methodology

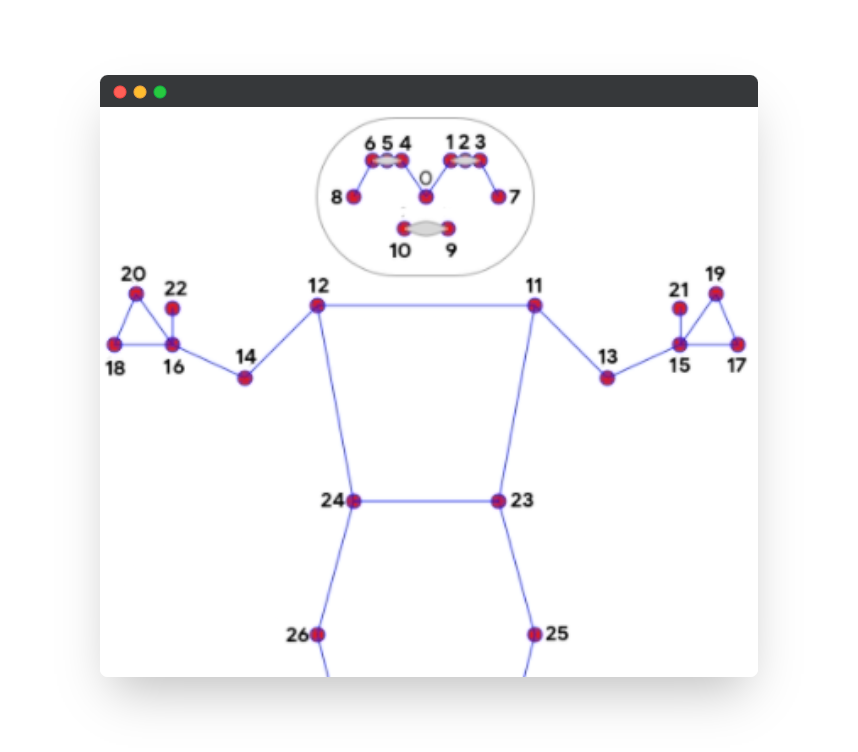

The project was implemented in Python in a Jupyter Notebook, using the laptop's built-in webcam as the real-time video feed for guided assistance. The first step was importing the required libraries and frameworks: OpenCV, MediaPipe, and NumPy. The MediaPipe joint map was then analyzed to establish the landmark coordinates and visibility logic, and a sample video feed was loaded. Once the respective joint coordinates were assigned, a custom function computed the joint angles from them. The code then defined the logic for the repetition (curl) counter, together with timers that are updated as the program runs. Together, these functions form the operational flow described below.
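The joint-angle step can be illustrated in isolation. The sketch below applies the same two-argument arctangent idea as the project's angle function, but it uses the standard-library `math` module instead of NumPy so that it runs without extra dependencies. The name `joint_angle` and the sample coordinates are illustrative assumptions, not part of the project code.

```python
import math


def joint_angle(a, b, c):
    """Return the angle at vertex b, in degrees (0 to 180), formed by points a-b-c.

    Each point is an (x, y) pair, for example normalized landmark coordinates.
    """
    # Direction of each limb segment as seen from the joint b
    angle_bc = math.atan2(c[1] - b[1], c[0] - b[0])
    angle_ba = math.atan2(a[1] - b[1], a[0] - b[0])
    angle = abs(math.degrees(angle_bc - angle_ba))
    # Fold reflex angles back into the 0-180 degree range
    return 360.0 - angle if angle > 180.0 else angle


# Illustrative coordinates: upper arm vertical, forearm horizontal
shoulder, elbow, wrist = (0.5, 0.2), (0.5, 0.5), (0.8, 0.5)
print(joint_angle(shoulder, elbow, wrist))  # a right angle at the elbow
```

Note that this is a 2D angle in the image plane. MediaPipe normalizes x by the frame width and y by the frame height, so on a non-square frame an angle computed from normalized coordinates can differ somewhat from the angle computed from pixel coordinates.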

Operational Flow: The program begins by welcoming the user and then guides them through a sequence of exercises chosen according to the therapist’s recommendations or the user’s specific needs. Each exercise is announced with an on-screen notification so the user can prepare. During the exercise, the program monitors the relevant landmarks and joint angles, and the repetition count is updated and displayed as feedback. Suggestions on the range of movement are also shown, encouraging users to adjust their range while maintaining correct form, which reduces the risk of injury. When the form is correct, “CORRECT” is shown on screen and the pose overlay turns green. Once the specified number of repetitions is complete, the program moves the user on to the next exercise until the entire rehabilitation session is finished, and it ends by congratulating the user on completing the session.

The currently supported exercises are: Shoulder Extension, Shoulder Flexion, Shoulder Abduction, Shoulder Adduction, Elbow Extension, Elbow Flexion, Knee Flexion, Knee Extension, Chair Sit-to-Stands, and the Berg Balance Test.
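The repetition counting and range-of-movement feedback described above can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not the project's implementation: the names `RepCounter` and `range_feedback`, the two separate thresholds, and the 90-degree target with a 10-degree tolerance are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class RepCounter:
    """Counts one repetition per up-then-down cycle of a joint angle.

    Hypothetical helper: `low` and `high` are illustrative thresholds in degrees.
    """
    low: float
    high: float
    stage: str = "down"
    count: int = 0

    def update(self, angle: float) -> int:
        # Moving above the high threshold marks the raised position
        if angle > self.high:
            self.stage = "up"
        # Returning below the low threshold after being raised completes a rep
        elif angle < self.low and self.stage == "up":
            self.stage = "down"
            self.count += 1
        return self.count


def range_feedback(angle: float, target: float = 90.0, tolerance: float = 10.0) -> str:
    """Classify the current angle against an assumed target range."""
    if angle > target + tolerance:
        return "LOWER"
    if angle < target - tolerance:
        return "RAISE"
    return "CORRECT"


counter = RepCounter(low=30.0, high=80.0)
for angle in [10, 40, 95, 85, 40, 20, 35, 92, 15]:
    counter.update(angle)
print(counter.count)          # number of complete up-down cycles in the sample
print(range_feedback(95.0))   # inside the assumed 80-100 degree band
```

Using two thresholds with a gap between them means that small jitter in the estimated angle around a single cut-off is less likely to be counted as extra repetitions.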

The code for RehabPal can be found below:

```python
import cv2
import mediapipe as mp
import numpy as np
import time


# Calculate the angle (in degrees) at joint b formed by the points a-b-c
def calculate_angle(a, b, c):
    a = np.array(a)
    b = np.array(b)
    c = np.array(c)

    radians = np.arctan2(c[1]-b[1], c[0]-b[0]) - np.arctan2(a[1]-b[1], a[0]-b[0])
    angle = np.abs(radians*180.0/np.pi)

    # Fold reflex angles back into the 0-180 degree range
    if angle > 180.0:
        angle = 360 - angle

    return angle


# Setup mediapipe instance
mp_pose = mp.solutions.pose
mp_drawing = mp.solutions.drawing_utils

cap = cv2.VideoCapture(0)

# Curl counter variables
elbow_counter = 0
shoulder_counter = 0
knee_counter = 0
elbow_stage = None
shoulder_stage = None
knee_stage = None

# Flag to indicate if the ready phase is active
ready_phase = True

# Flag to indicate the current exercise phase
exercise_phase = None

# Timers (in frames) for ready phase and exercise announcement
ready_timer = 50
exercise_timer = 0

# Flag to control landmark visibility during exercise phase
show_landmarks = True

# Colors for text and box
text_color = (255, 255, 255)
box_color = (0, 0, 255)

# Main loop
with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
    exercise_number = 1  # Initialize exercise number
    correct_flag = 0
    start_time = None

    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            # Stop if the camera returns no frame
            break

        # If ready phase is active
        if ready_timer > 0:
            ready_timer -= 1
            cv2.rectangle(frame, (120, 200), (500, 280), box_color, -1)
            cv2.putText(frame, 'Ready to begin Rehab', (140, 250),
                        cv2.FONT_HERSHEY_SIMPLEX, 1, text_color, 2, cv2.LINE_AA)
        else:
            if exercise_phase is None:
                exercise_phase = 'Shoulder'
                exercise_timer = 50
                show_landmarks = True
                start_time = time.time()  # Start the timer

            # If the exercise announcement is still on screen
            if exercise_timer > 0:
                exercise_timer -= 1
                cv2.rectangle(frame, (40, 200), (610, 280), (0, 255, 255), -1)
                cv2.putText(frame, f'Exercise {exercise_number}: {exercise_phase} Strengthening', (50, 250),
                            cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 0), 2, cv2.LINE_AA)
                show_landmarks = False
            else:
                # Reset show_landmarks flag when the announcement ends
                show_landmarks = True

                # Recolor image to RGB
                image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                image.flags.writeable = False

                # Make detection
                results = pose.process(image)

                # Recolor back to BGR
                image.flags.writeable = True
                image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

                if results.pose_landmarks is None:
                    # No person detected: show the raw frame and skip the exercise logic
                    cv2.imshow('Mediapipe Feed', frame)
                    if cv2.waitKey(10) & 0xFF == ord('q'):
                        break
                    continue

                # Extract landmarks (normalized x, y coordinates)
                landmarks = results.pose_landmarks.landmark

                shoulder_l = [landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].x, landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].y]
                elbow_l = [landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].x, landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].y]
                wrist_l = [landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].x, landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].y]
                hip_l = [landmarks[mp_pose.PoseLandmark.LEFT_HIP.value].x, landmarks[mp_pose.PoseLandmark.LEFT_HIP.value].y]
                knee_l = [landmarks[mp_pose.PoseLandmark.LEFT_KNEE.value].x, landmarks[mp_pose.PoseLandmark.LEFT_KNEE.value].y]
                ankle_l = [landmarks[mp_pose.PoseLandmark.LEFT_ANKLE.value].x, landmarks[mp_pose.PoseLandmark.LEFT_ANKLE.value].y]

                shoulder_r = [landmarks[mp_pose.PoseLandmark.RIGHT_SHOULDER.value].x, landmarks[mp_pose.PoseLandmark.RIGHT_SHOULDER.value].y]
                elbow_r = [landmarks[mp_pose.PoseLandmark.RIGHT_ELBOW.value].x, landmarks[mp_pose.PoseLandmark.RIGHT_ELBOW.value].y]
                wrist_r = [landmarks[mp_pose.PoseLandmark.RIGHT_WRIST.value].x, landmarks[mp_pose.PoseLandmark.RIGHT_WRIST.value].y]
                hip_r = [landmarks[mp_pose.PoseLandmark.RIGHT_HIP.value].x, landmarks[mp_pose.PoseLandmark.RIGHT_HIP.value].y]
                knee_r = [landmarks[mp_pose.PoseLandmark.RIGHT_KNEE.value].x, landmarks[mp_pose.PoseLandmark.RIGHT_KNEE.value].y]
                ankle_r = [landmarks[mp_pose.PoseLandmark.RIGHT_ANKLE.value].x, landmarks[mp_pose.PoseLandmark.RIGHT_ANKLE.value].y]

                # Joint angles are computed from the left side of the body
                elbow_angle = calculate_angle(shoulder_l, elbow_l, wrist_l)
                shoulder_angle = calculate_angle(elbow_l, shoulder_l, hip_l)
                knee_angle = calculate_angle(hip_l, knee_l, ankle_l)

                # Visualize each angle next to its joint (assumes a 640x480 frame)
                cv2.putText(frame, str(elbow_angle),
                            tuple(np.multiply(elbow_l, [640, 480]).astype(int)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 2, cv2.LINE_AA)
                cv2.putText(frame, str(shoulder_angle),
                            tuple(np.multiply(shoulder_l, [640, 480]).astype(int)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 2, cv2.LINE_AA)
                cv2.putText(frame, str(knee_angle),
                            tuple(np.multiply(knee_l, [640, 480]).astype(int)),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 2, cv2.LINE_AA)

                # Counter logic based on exercise phase
                if exercise_phase == 'Shoulder':
                    if shoulder_angle > 30:
                        shoulder_stage = "up"
                        if shoulder_angle > 100:
                            cv2.rectangle(frame, (120, 0), (500, 60), (255, 255, 255), -1)
                            cv2.putText(frame, 'Bring Shoulder Down to 90', (170, 30),
                                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 0), 2, cv2.LINE_AA)
                            correct_flag = 0
                        elif shoulder_angle < 80:
                            cv2.rectangle(frame, (120, 0), (500, 60), (255, 255, 255), -1)
                            cv2.putText(frame, 'Bring Shoulder Up to 90', (190, 30),
                                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 0), 2, cv2.LINE_AA)
                            correct_flag = 0
                        else:
                            cv2.rectangle(frame, (120, 0), (500, 60), (255, 255, 255), -1)
                            cv2.putText(frame, 'CORRECT !', (250, 30),
                                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 0), 2, cv2.LINE_AA)
                            correct_flag = 1
                    if shoulder_angle < 30 and shoulder_stage == 'up':
                        shoulder_stage = "down"
                        shoulder_counter += 1
                        print(f"Shoulder Counter: {shoulder_counter}")
                        correct_flag = 0

                elif exercise_phase == 'Elbow':
                    if elbow_angle < 90:
                        elbow_stage = "down"
                        if elbow_angle > 20:
                            cv2.rectangle(frame, (120, 0), (500, 60), (255, 255, 255), -1)
                            cv2.putText(frame, 'Bring Elbow Up', (170, 30),
                                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 0), 2, cv2.LINE_AA)
                            correct_flag = 0
                        elif elbow_angle < 5:
                            cv2.rectangle(frame, (120, 0), (500, 60), (255, 255, 255), -1)
                            cv2.putText(frame, 'CORRECT !', (250, 30),
                                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 0), 2, cv2.LINE_AA)
                            correct_flag = 1
                    if elbow_angle > 90 and elbow_stage == 'down':
                        elbow_stage = "up"
                        elbow_counter += 1
                        print(f"Elbow Counter: {elbow_counter}")
                        correct_flag = 0

                elif exercise_phase == 'Knee':
                    if knee_angle > 90:
                        knee_stage = "up"
                        if knee_angle < 135:
                            cv2.rectangle(frame, (120, 0), (500, 60), (255, 255, 255), -1)
                            cv2.putText(frame, 'Bring Knee Up', (170, 30),
                                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 0), 2, cv2.LINE_AA)
                            correct_flag = 0
                        elif knee_angle > 145:  # Compare the knee angle, not the elbow angle
                            cv2.rectangle(frame, (120, 0), (500, 60), (255, 255, 255), -1)
                            cv2.putText(frame, 'CORRECT !', (250, 30),
                                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 0), 2, cv2.LINE_AA)
                            correct_flag = 1
                    if knee_angle < 90 and knee_stage == 'up':
                        knee_stage = "down"
                        knee_counter += 1
                        print(f"Knee Counter: {knee_counter}")
                        correct_flag = 0

                # Check if exercise count reached 5
                if exercise_phase == 'Shoulder' and shoulder_counter >= 5:
                    end_time_shoulder = time.time()  # Stop the timer
                    time_taken_shoulder = round(end_time_shoulder - start_time, 2)
                    print(f'Time taken for Shoulder phase: {time_taken_shoulder} seconds')
                    exercise_number += 1  # Increment exercise number
                    exercise_phase = 'Elbow'
                    exercise_timer = 50
                    shoulder_counter = 0
                    start_time = time.time()
                elif exercise_phase == 'Elbow' and elbow_counter >= 5:
                    end_time_elbow = time.time()  # Stop the timer
                    time_taken_elbow = round(end_time_elbow - start_time, 2)
                    print(f'Time taken for Elbow phase: {time_taken_elbow} seconds')
                    exercise_number += 1  # Increment exercise number
                    exercise_phase = 'Knee'
                    exercise_timer = 50
                    elbow_counter = 0
                    start_time = time.time()
                elif exercise_phase == 'Knee' and knee_counter >= 5:
                    end_time_knee = time.time()  # Stop the timer
                    time_taken_knee = round(end_time_knee - start_time, 2)
                    print(f'Time taken for Knee phase: {time_taken_knee} seconds')
                    cv2.rectangle(frame, (120, 200), (500, 530), box_color, -1)
                    cv2.putText(frame, 'Rehab Session Ended', (140, 250),
                                cv2.FONT_HERSHEY_SIMPLEX, 1, text_color, 2, cv2.LINE_AA)
                    cv2.putText(frame, 'Congratulations!', (185, 300),
                                cv2.FONT_HERSHEY_SIMPLEX, 1, text_color, 2, cv2.LINE_AA)
                    # Drawn one line lower so it does not overlap the message above
                    cv2.putText(frame, 'Sess Timing:', (185, 350),
                                cv2.FONT_HERSHEY_SIMPLEX, 1, text_color, 2, cv2.LINE_AA)

                # Render curl counter
                # Setup status box
                cv2.rectangle(frame, (0, 0), (150, 60), (0, 0, 0), -1)
                text_offset = 20

                if exercise_phase == 'Shoulder':
                    # Rep data for Shoulder
                    shoulder_text = f'{shoulder_counter} {shoulder_stage}'
                    cv2.putText(frame, 'Shoulder Reps', (5, text_offset),
                                cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1, cv2.LINE_AA)
                    cv2.putText(frame, shoulder_text, (5, text_offset + 20),
                                cv2.FONT_HERSHEY_SIMPLEX, 0.4, (255, 255, 255), 1, cv2.LINE_AA)
                elif exercise_phase == 'Elbow':
                    # Rep data for Elbow
                    elbow_text = f'{elbow_counter} {elbow_stage}'
                    cv2.putText(frame, 'Elbow Reps', (5, text_offset),
                                cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1, cv2.LINE_AA)
                    cv2.putText(frame, elbow_text, (5, text_offset + 20),
                                cv2.FONT_HERSHEY_SIMPLEX, 0.4, (255, 255, 255), 1, cv2.LINE_AA)
                elif exercise_phase == 'Knee':
                    # Rep data for Knee
                    knee_text = f'{knee_counter} {knee_stage}'
                    cv2.putText(frame, 'Knee Reps', (5, text_offset),
                                cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1, cv2.LINE_AA)
                    cv2.putText(frame, knee_text, (5, text_offset + 20),
                                cv2.FONT_HERSHEY_SIMPLEX, 0.4, (255, 255, 255), 1, cv2.LINE_AA)

                # Render detections only if show_landmarks is True;
                # the overlay turns green while the form is correct
                if show_landmarks and correct_flag == 0:
                    mp_drawing.draw_landmarks(frame, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                              mp_drawing.DrawingSpec(color=(245, 117, 66), thickness=2, circle_radius=2),
                                              mp_drawing.DrawingSpec(color=(245, 66, 230), thickness=2, circle_radius=2)
                                              )
                elif show_landmarks and correct_flag == 1:
                    mp_drawing.draw_landmarks(frame, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                              mp_drawing.DrawingSpec(color=(0, 117, 0), thickness=2, circle_radius=2),
                                              mp_drawing.DrawingSpec(color=(0, 255, 0), thickness=2, circle_radius=2)
                                              )

        # Display frame
        cv2.imshow('Mediapipe Feed', frame)

        if cv2.waitKey(10) & 0xFF == ord('q'):
            break

cap.release()
cv2.destroyAllWindows()
```

Results & Discussion

The evaluation of RehabPal highlighted its effectiveness in providing personalized guidance and real-time feedback for users, particularly elderly and neurologically challenged patients undergoing physical rehabilitation therapy. Through joint-angle computation and continuous monitoring of joint landmarks during exercises such as shoulder extension, flexion, and abduction, the system counted repetitions and offered feedback on the range of motion. The visual cues that indicate correct form during each repetition help users understand and execute the exercises. The program also guided users through a variety of exercises, moving from one to the next without interruption, which ensured a comprehensive rehabilitation session.

In the testing phase, five healthy elderly subjects with a mean age of 65 years (standard deviation 1.7 years) evaluated the system by completing a basic round of exercises. In their subjective feedback, the participants rated the system as more user-friendly than traditional audio or manual instructions given by a therapist or family member in a home setting. All subjects completed the entire session and expressed a preference for the virtual assistant over conventional methods. They reported feeling more motivated by the engaging visual feedback and noted an improved sense of self-correction, which strengthened their perception of maintaining correct form during the exercises. These observations point to the system’s potential to improve user experience and motivation in a home rehabilitation setting.

Conclusion & Future Work

In conclusion, RehabPal, a virtual rehabilitation and balance trainer built on MediaPipe, showed promising results in providing real-time guidance and feedback for elderly and neurologically challenged individuals during exercise sessions. The positive feedback from user testing suggests that the system is user-friendly and motivating, contributing to a better exercise experience. Future work could expand the range of supported exercises, incorporate machine learning for more personalized feedback, and integrate additional sensors or wearable devices to improve the system’s accuracy and versatility. Collaboration with healthcare professionals on further clinical validation and refinement could also help establish RehabPal as an effective tool for home-based rehabilitation that addresses the needs of diverse users.

Additional Documents

More details about this project: GitHub Link.

Guide: –