Vision-Based Terrain Classification for a Cable-Driven Ankle Exoskeleton

Introduction

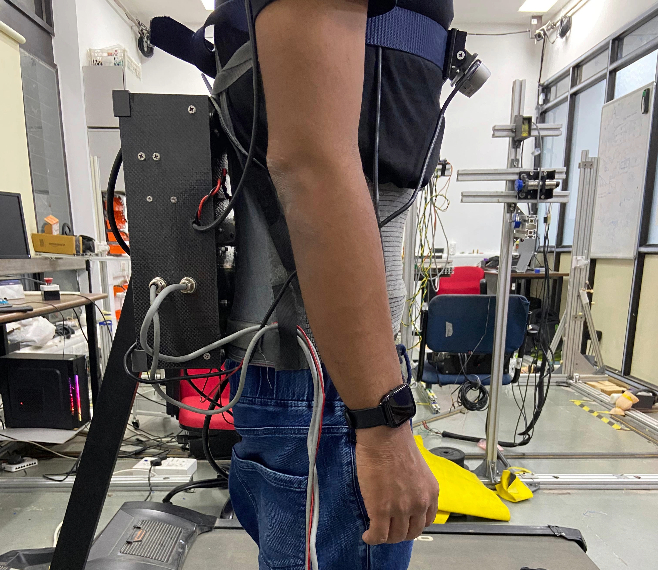

The goal of this study is to create a computer-vision-based terrain classification system that lets an ankle exoskeleton adapt its assistance as gait changes across diverse terrains. I developed a real-time terrain classification system that uses an Intel RealSense D435i camera to distinguish three terrains (Overground, Upstairs, Downstairs). The model was optimized for deployment on an NVIDIA Jetson Nano, and a high-level controller logic was implemented to apply the adaptive force profiles. A novel segmented predictive classification scheme ensured smoother transitions between terrains, and the high-level control logic aims to improve human-robot symbiosis by minimizing walking energy expenditure. The focus was to explore timely collaboration in dynamic environments, enhancing the use of exoskeletons in Activities of Daily Living (ADL) to maximize user benefits.

Methodology

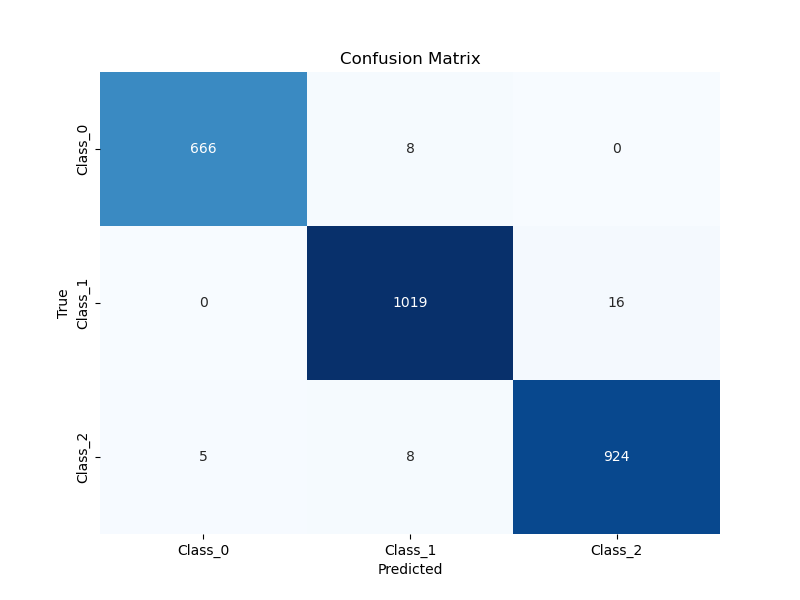

Data Collection & Processing: An Intel RealSense D435i camera captured real-time RGB and depth images of individuals walking on various terrains, including Overground, Upstairs, and Downstairs. The collected data was later annotated to create a custom labeled dataset of 12,000 images for training the model. A segmentation step then split each labeled image (640×480 px) into 3 equal segments for training.
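As a rough illustration of the segmentation step, the sketch below computes crop boxes that divide a 640×480 frame into 3 equal segments. The source does not say along which axis the frame is cut; horizontal bands of 160 rows are assumed here because 480 divides evenly by 3. The function name `segment_boxes` is hypothetical.

```python
def segment_boxes(width, height, n_segments=3):
    """Return (left, upper, right, lower) boxes for equal horizontal bands.

    Assumption: the frame is cut along its height, top band first.
    """
    if height % n_segments != 0:
        raise ValueError("height must divide evenly into n_segments")
    band = height // n_segments
    return [(0, i * band, width, (i + 1) * band) for i in range(n_segments)]


boxes = segment_boxes(640, 480)
for box in boxes:
    print(box)
```

Each box follows the `(left, upper, right, lower)` convention accepted by Pillow's `Image.crop`, so a labeled frame could be cut with `frame.crop(box)`.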

Model Training: A pretrained MobileNetV2 model was fine-tuned on the custom dataset using the PyTorch framework. To respect the platform’s computational constraints while maintaining real-time performance, the model was quantized for deployment on the NVIDIA Jetson Nano.
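To make concrete what quantization does to a set of weights, here is a minimal sketch of 8-bit affine quantization in plain Python. It is not the PyTorch deployment pipeline used in the project; the helper names `quantize` and `dequantize` and the sample weights are illustrative assumptions.

```python
def quantize(values, num_bits=8):
    """Map floats to unsigned integers with an affine (scale, zero-point) scheme."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # The representable range must include 0 so that 0.0 maps to an exact integer.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)
    q = [min(qmax, max(qmin, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate floats from the integer codes."""
    return [scale * (x - zero_point) for x in q]


weights = [-0.5, 0.0, 0.25, 1.0]  # illustrative values, not from the trained model
q, scale, zp = quantize(weights)
restored = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, zp, max_err)
```

Each value is stored in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error on the order of one quantization step (`scale`).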

–

import torch

import torchvision

from torch import nn, optim

from torchvision import datasets, transforms, models

from torch.utils.data import Dataset, DataLoader

import copy

import time

from tqdm import tqdm

from custom_dataset import MyLazyDataset

# ARGS

BATCH_SIZE = 16

TEST_BATCH_SIZE = 16

DEVICE = 'cuda'

if __name__ == '__main__':

torch.multiprocessing.freeze_support()

def train_model(model, dataloaders, criterion, optimizer, scheduler, num_epochs=25, device='cuda'):

since = time.time()

best_model_wts = copy.deepcopy(model.state_dict())

best_acc = 0.0

for epoch in range(num_epochs):

print(f'Epoch {epoch}/{num_epochs - 1}')

print('-' * 10)

for phase in ['train', 'val']:

if phase == 'train':

model.train()

else:

model.eval()

running_loss = 0.0

running_corrects = 0

for inputs, labels in tqdm(dataloaders[phase]):

inputs = inputs.to(device)

labels = labels.to(device)

optimizer.zero_grad()

with torch.set_grad_enabled(phase == 'train'):

outputs = model(inputs)

_, preds = torch.max(outputs, 1)

loss = criterion(outputs, labels)

if phase == 'train':

loss.backward()

optimizer.step()

running_loss += loss.item() * inputs.size(0)

running_corrects += torch.sum(preds == labels.data)

if phase == 'train':

scheduler.step()

epoch_loss = running_loss / dataset_sizes[phase]

epoch_acc = running_corrects.double() / dataset_sizes[phase]

print(f'{phase} Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')

if phase == 'val' and epoch_acc > best_acc:

best_acc = epoch_acc

best_model_wts = copy.deepcopy(model.state_dict())

print()

time_elapsed = time.time() - since

print(f'Training complete in {time_elapsed // 60:.0f}m {time_elapsed % 60:.0f}s')

print(f'Best val Acc: {best_acc:.4f}')

model.load_state_dict(best_model_wts)

return model

def create_combined_model(model_fe, num_ftrs):

model_fe_features = nn.Sequential(

model_fe.features,

)

new_head = nn.Sequential(

nn.Dropout(p=0.35),

nn.Linear(num_ftrs, 3),

)

new_model = nn.Sequential(

model_fe_features,

nn.AdaptiveAvgPool2d(output_size=(1, 1)),

nn.Flatten(1),

new_head,

)

return new_model

data_transforms = {

'train': transforms.Compose([

# transforms.Resize(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

]),

'val': transforms.Compose([

# transforms.Resize(224),

# transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

]),

}

# Update the dataset path according to your structure

dataset = torchvision.datasets.ImageFolder('D:\Documents\Exp_Data\Data_Main')

train_dataset, test_dataset = torch.utils.data.random_split(dataset, [int(len(dataset) * 0.75), len(dataset) - int(len(dataset) * 0.75)])

dataset_sizes = {'train': len(train_dataset), 'val': len(test_dataset)}

train_dataset = MyLazyDataset(train_dataset, data_transforms['train'])

test_dataset = MyLazyDataset(test_dataset, data_transforms['val'])

train_sampler = torch.utils.data.RandomSampler(train_dataset)

test_sampler = torch.utils.data.SequentialSampler(test_dataset)

data_loader = torch.utils.data.DataLoader(

train_dataset, batch_size=BATCH_SIZE, sampler=train_sampler, num_workers=2, pin_memory=True

)

data_loader_test = torch.utils.data.DataLoader(

test_dataset, batch_size=TEST_BATCH_SIZE, sampler=test_sampler, num_workers=2, pin_memory=True

)

dataloaders = {'train': data_loader, 'val': data_loader_test}

model = models.mobilenet_v2(pretrained=True)

num_ftrs = model.classifier[1].in_features

model.to(DEVICE)

criterion = nn.CrossEntropyLoss()

optimizer_ft = optim.SGD(model.parameters(), lr=1e-3, momentum=0.9, weight_decay=0.1)

exp_lr_scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=5, gamma=0.3)

model_ft_tuned = train_model(model, dataloaders, criterion, optimizer_ft, exp_lr_scheduler, num_epochs=5, device=DEVICE)

# Print the architecture

print(model_ft_tuned)

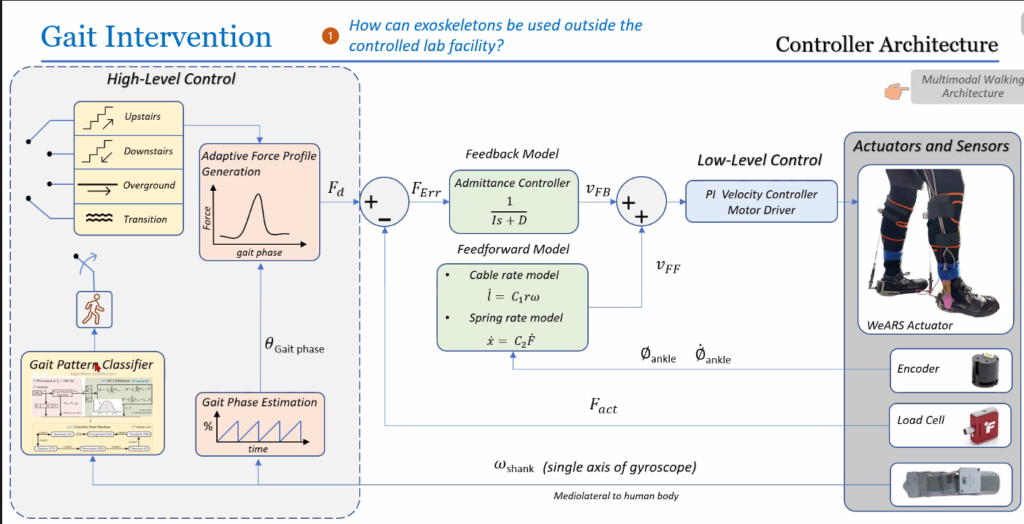

torch.save(model_ft_tuned.state_dict(), 'D:\Documents\Exp_Data\finaldatamain1.pt')Controller Design: A high-level controller logic was integrated in LabVIEW for a cable-driven exoskeleton, incorporating inputs from the RealSense camera to adapt the exoskeleton’s behavior based on the classified gait patterns (for different terrains).
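One way a high-level logic could avoid flickering between force profiles is to switch terrain only after several consecutive predictions agree. The sketch below is an assumption about how such smoothing might look, not the LabVIEW logic used in the project; the class name `TerrainDebouncer` and the threshold of 3 predictions are hypothetical.

```python
class TerrainDebouncer:
    """Switch the active terrain only after `hold` consecutive matching predictions."""

    def __init__(self, initial="Overground", hold=3):
        self.active = initial
        self.hold = hold
        self._candidate = None
        self._count = 0

    def _reset(self):
        self._candidate, self._count = None, 0

    def update(self, prediction):
        if prediction == self.active:
            # The classifier agrees with the current terrain: drop any pending switch.
            self._reset()
            return self.active
        if prediction == self._candidate:
            self._count += 1
        else:
            # A different terrain starts its own count.
            self._candidate, self._count = prediction, 1
        if self._count >= self.hold:
            self.active = prediction
            self._reset()
        return self.active


debouncer = TerrainDebouncer()
stream = ["Overground", "Upstairs", "Overground",
          "Upstairs", "Upstairs", "Upstairs", "Upstairs"]
history = [debouncer.update(p) for p in stream]
print(history)
```

The isolated `Upstairs` prediction in the second frame is ignored, and the switch happens only once three `Upstairs` predictions arrive in a row. A larger `hold` trades responsiveness for stability.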

A combination of an admittance controller, a feed-forward controller, and an iterative controller was used to keep up with the quick motion of the ankle during walking. The admittance controller presented virtual dynamics, modelled as inertia (I) and damping (D), to the wearer. It took the force error, $F_{error}(s)$, as input and generated a reference velocity, $v_{FB}(s)$, as output. The feed-forward controller modelled the cable and spring rate to compensate for the ankle motion and for the deflection of the attached spring and brace, respectively. The iterative controller, which counters the repetitive force errors of walking, was implemented to improve the force tracking achieved by the admittance and feed-forward controllers.
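Virtual dynamics made of inertia and damping are commonly written as the first-order admittance $v_{FB}(s) = F_{error}(s) / (I s + D)$. The source does not state the exact transfer function, so this form is an assumption. The sketch below discretizes it with a forward-Euler step; the values of `inertia`, `damping`, and `dt` are placeholders, not the tuned parameters of the exoskeleton.

```python
def admittance_step(v_prev, f_error, inertia, damping, dt):
    """One forward-Euler step of I * dv/dt + D * v = F_error."""
    dv = (f_error - damping * v_prev) / inertia
    return v_prev + dt * dv


# Placeholder parameters for illustration only.
inertia, damping, dt = 0.5, 10.0, 0.001
f_error = 5.0  # constant force error
v = 0.0
for _ in range(2000):  # 2 s of simulated time
    v = admittance_step(v, f_error, inertia, damping, dt)
print(round(v, 4))
```

Under a constant force error the reference velocity settles at `f_error / damping`, with a time constant of `inertia / damping`. Lower damping therefore makes the device feel more compliant, and lower inertia makes it respond faster.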

Low-Level Control: The velocity set-point provided by the high-level controller was tracked by the low-level controller, a PI-based feedback loop implemented on the Faulhaber motion controller. The low-level controller drives the motor and pulley to generate the cable velocity required to apply the desired ankle torque.
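A PI velocity loop of the kind described can be sketched as follows. The motor is replaced by a toy first-order lag and the gains are arbitrary; none of these values come from the Faulhaber controller configuration.

```python
class PIController:
    """Discrete proportional-integral controller."""

    def __init__(self, kp, ki, dt):
        self.kp, self.ki, self.dt = kp, ki, dt
        self.integral = 0.0

    def update(self, setpoint, measured):
        error = setpoint - measured
        self.integral += error * self.dt  # accumulate the error over time
        return self.kp * error + self.ki * self.integral


# Toy plant (assumption): tau * dv/dt = command - v.
dt, tau = 0.001, 0.05
pi = PIController(kp=2.0, ki=40.0, dt=dt)
velocity, setpoint = 0.0, 0.2
for _ in range(3000):  # 3 s of simulated time
    command = pi.update(setpoint, velocity)
    velocity += dt * (command - velocity) / tau
print(round(velocity, 4))
```

The integral term is what removes the steady-state error: with proportional action alone, this plant would settle below the set-point.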

Experiment Protocol: In the first phase, 7 subjects wore the ankle exoskeleton and traversed a predefined path covering the various terrains while a chest-mounted camera captured real-time data. The study focused on comparing the temporal variance between gait classification derived from Inertial Measurement Unit (IMU) readings, based on biomechanical markers, and the corresponding actuation triggered by the camera and the integrated model.

Results & Discussion

–

–

Conclusion & Future Work

–

Additional Documents

–

Guide: Vineet Vashista | Collaborators: Dhyey Shah, Yogesh Singh